MERGE

Datasets and Extracted Features:

- MERGE bimodal audio-lyrics dataset (2024)

We created a new bimodal audio-lyrics dataset, annotated with Russell's four quadrants and continuous arousal-valence values. It contains 3554 audio clips and 2568 lyrics.

If you use it, please cite the following article(s):

Louro P. L., Redinho H., Ribeiro T. T. F., Santos R., Malheiro R., Panda R. & Paiva R. P. (2025). “MERGE - A Bimodal Audio-Lyrics Dataset for Static Music Emotion Recognition”. Preprint. [Online]. Available: http://arxiv.org/abs/2407.06060.
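Because the MERGE annotations pair quadrant labels with continuous arousal-valence values, a quadrant can in principle be read off from the sign of each dimension. The sketch below assumes the common convention Q1 = positive valence/high arousal, Q2 = negative valence/high arousal, Q3 = negative valence/low arousal, Q4 = positive valence/low arousal, and assumes both values are centred on 0. The function name `av_to_quadrant` and the tie-breaking for points lying exactly on an axis are illustrative choices, not part of the dataset release, and this is not necessarily how the MERGE quadrant labels were produced.

```python
from typing import Literal

Quadrant = Literal["Q1", "Q2", "Q3", "Q4"]


def av_to_quadrant(valence: float, arousal: float) -> Quadrant:
    """Map an arousal-valence pair to a Russell quadrant (hypothetical helper).

    Assumes both values are centred on 0 (e.g. scaled to [-1, 1]).
    Points exactly on an axis go to the positive side, an arbitrary
    convention chosen for this sketch.
    """
    if arousal >= 0:
        # High arousal: Q1 (positive valence) or Q2 (negative valence)
        return "Q1" if valence >= 0 else "Q2"
    # Low arousal: Q4 (positive valence) or Q3 (negative valence)
    return "Q4" if valence >= 0 else "Q3"


if __name__ == "__main__":
    # Made-up (valence, arousal) pairs, one per quadrant
    samples = [(0.6, 0.7), (-0.5, 0.8), (-0.4, -0.6), (0.3, -0.5)]
    for v, a in samples:
        print(v, a, av_to_quadrant(v, a))
```

If the continuous annotations use a different scale (for example, 1 to 9 with 5 as neutral), subtract the scale midpoint from each value before calling the function.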

Software:

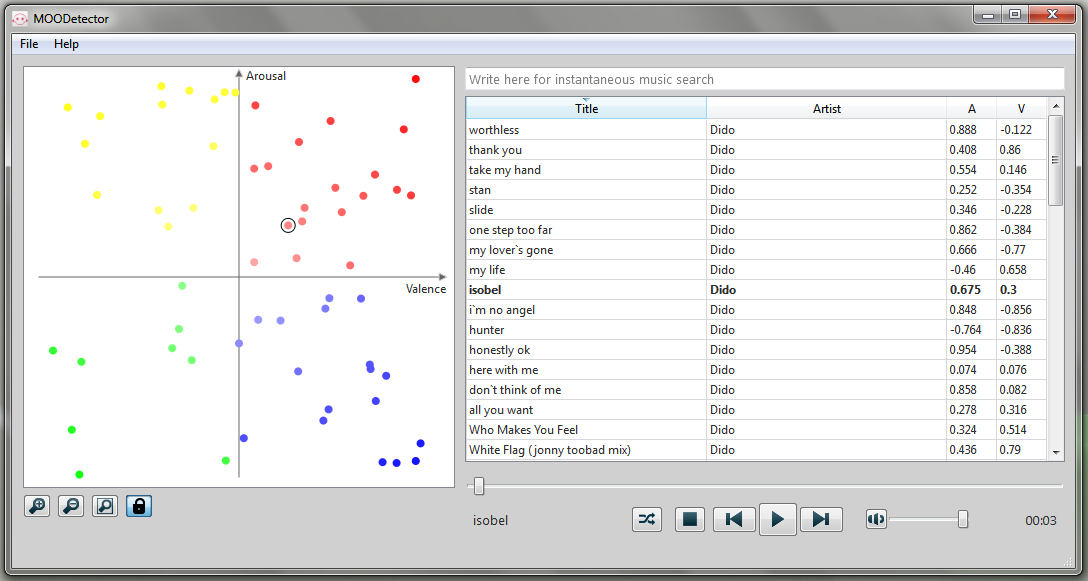

MOODetector

Datasets and Extracted Features:

- 4Q audio emotion dataset (Russell's model) (2018)

Download dataset

Download features

We created a new 4-quadrant audio emotion dataset. It contains 900 audio clips, annotated into 4 quadrants according to Russell's model.

If you use it, please cite the following article(s):

Panda R., Malheiro R. & Paiva R. P. (2018). “Novel audio features for music emotion recognition”. IEEE Transactions on Affective Computing (IEEE early access). DOI: 10.1109/TAFFC.2018.2820691.

Panda R., Malheiro R. & Paiva R. P. (2018). “Musical Texture and Expressivity Features for Music Emotion Recognition”. 19th International Society for Music Information Retrieval Conference – ISMIR 2018, Paris, France.

- Bi-modal (audio and lyrics) emotion dataset (Russell's model) (2016)

We created a new bi-modal (audio and lyrics) emotion dataset. It contains 133 audio clips and lyrics, manually annotated into 4 quadrants, according to Russell's model.

If you use it, please cite the following article:

Malheiro R., Panda R., Gomes P. & Paiva R. P. (2016). “Bi-Modal Music Emotion Recognition: Novel Lyrical Features and Dataset”. 9th International Workshop on Music and Machine Learning – MML'2016 – in conjunction with the European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases – ECML/PKDD 2016, Riva del Garda, Italy.

- Lyrics emotion sentences dataset (Russell's model) (2016)

We created a new sentence-based lyrics emotion dataset for Lyrics Emotion Variation Detection research. It contains a total of 368 sentences manually annotated into 4 quadrants (based on Russell's model). The dataset was split into a 129-sentence training set and a 239-sentence test set. It also contains an emotion dictionary with 1246 words.

If you use it, please cite the following thesis:

Ricardo Malheiro (2017). “Emotion-based Analysis and Classification of Music Lyrics”. Doctoral Program in Information Science and Technology. University of Coimbra.

- Lyrics emotion dataset (Russell's model) (2016)

Download dataset

Download features

We created a new lyrics emotion dataset. It contains two parts: i) a 180-lyrics dataset manually annotated with arousal and valence values (based on Russell's model); ii) a 771-lyrics dataset annotated into 4 quadrants (Russell's model) based on AllMusic tags.

If you use it, please cite the following article:

Malheiro R., Panda R., Gomes P. & Paiva R. P. (2018). “Emotionally-Relevant Features for Classification and Regression of Music Lyrics”. IEEE Transactions on Affective Computing, Vol. 9(2), pp. 240-254, doi:10.1109/TAFFC.2016.2598569.

- Multi-modal MIREX-like emotion dataset (2013)

We created a new multi-modal MIREX-like emotion dataset. It contains 903 audio clips (30 seconds each), 764 lyrics and 193 MIDI files. To the best of our knowledge, this is the first emotion dataset containing these 3 sources (audio, lyrics and MIDI).

If you use it, please cite the following article:

Panda R., Malheiro R., Rocha B., Oliveira A. & Paiva R. P. (2013). “Multi-Modal Music Emotion Recognition: A New Dataset, Methodology and Comparative Analysis”. 10th International Symposium on Computer Music Multidisciplinary Research – CMMR'2013, Marseille, France.

Software:

- Lyrics Feature Extraction System (2022)

We created a lyrics feature extraction system based on the contributions described in our article:

Malheiro R., Panda R., Gomes P. & Paiva R. P. (2018). “Emotionally-Relevant Features for Classification and Regression of Music Lyrics”. IEEE Transactions on Affective Computing, Vol. 9(2), pp. 240-254, doi:10.1109/TAFFC.2016.2598569.

- MOODetector Reloaded (2016)

Our MOODetector prototype is being updated with a new user interface and, most importantly, a more accurate regression model. We will make the software public as soon as it is stable.

- MOODetector (2011)

We created a prototype application for Windows (XP and later).

- Updates:

- March 1, 2012: mood-tracking bugs corrected.

Note: If the application fails to run, install the Visual Studio Redistributable Package.

This is a prototype application, intended as a proof of concept for Music Emotion Recognition and Music Emotion Variation Detection. It has several limitations, particularly in valence prediction accuracy. It is built on top of Marsyas, which, despite its many virtues, appears to lack some of the features most relevant to valence prediction. In fact, using the MIR Toolbox yielded higher accuracy (see our publications for more information). We therefore plan to extend the prototype with more relevant features, both from the MIR Toolbox and from our own research.

For a detailed description of the MOODetector application prototype, please check this article:

Cardoso L., Panda R. & Paiva R. P. (2011). “MOODetector: A Prototype Software Tool for Mood-based Playlist Generation”. Simpósio de Informática – INForum 2011, Coimbra, Portugal.